在当下这场席卷全球的AI热潮中,鲜有故事比利奥波德·阿申布伦纳的经历更引人注目。

这位23岁年轻人的职业生涯开局并不顺利:他曾在萨姆·班克曼-弗里德现已破产的FTX加密货币交易所的慈善部门工作,之后在AI领域最具影响力的公司之一OpenAI度过了颇具争议的一年,并最终被解雇。然而,在被该公司辞退仅两个月后,阿申布伦纳撰写了一份AI宣言,引发网络热议,美国总统唐纳德·特朗普的女儿伊万卡甚至都在社交媒体上为其点赞。随后,他以这份宣言作为跳板,创立了一家对冲基金,如今该基金的资产管理规模超过15亿美元。按照对冲基金的标准,这家基金规模一般,但对于一个刚刚大学毕业的年轻人而言却堪称传奇。从哥伦比亚大学(Columbia)毕业仅四年,阿申布伦纳就已经能与科技公司CEO、投资者和政策制定者私下里侃侃而谈,被他们视为AI时代的“先知”。

阿申布伦纳的崛起令人瞠目,这也让许多人不仅好奇这位出生于德国的年轻AI研究员的成功秘诀,更质疑围绕他的热度是否名副其实。在一些人眼中,阿申布伦纳堪称罕见的天才。他比任何人都更清晰地洞察到时代的关键节点:类人通用人工智能(AGI)的到来、中国在AI竞赛中的加速崛起,以及先行者将收获巨额财富等。但在另一些人看来,包括几位OpenAI前同事,他不过是个幸运的新手,没有任何金融从业记录,只是将一场AI的狂潮重新包装成一份对冲基金的募资说辞。

阿申布伦纳的迅速崛起,展现了硅谷如何将时代精神转化为资本,以及资本又如何进一步转化为影响力。批评者质疑,他创立对冲基金只是为了将那些真伪难辨的科技预言变现获利;但他的朋友们则有着不同的解读。如Anthropic研究员肖尔托·道格拉斯将此视为一种“变革理论”。道格拉斯解释说,阿申布伦纳正在利用这家对冲基金在金融体系中树立起可信的发言地位:“他的意思是——‘我对世界未来的发展方向有着极强的信念,而我正在以真金白银来验证这一信念。’”

但这也引出了一个耐人寻味的问题:为何如此多人愿意信任这位初出茅庐的新秀?

答案并不简单。根据《财富》记者对十余位阿申布伦纳的朋友、前同事、熟人,以及多位投资人和硅谷业内人士的采访,一个主题反复出现:阿申布伦纳善于捕捉那些在硅谷实验室中逐渐积聚势能的理念,并将它们整合成一套连贯而令人信服的叙事体系。对那些风险偏好旺盛的投资者而言,这套叙事就像一份精心搭配的“招牌特餐”,让人难以拒绝。

阿申布伦纳拒绝就本文置评。多位消息人士因担忧谈论在AI圈内具有巨大权力与影响力的人物可能带来风险,要求匿名接受采访。

许多人在谈及阿申布伦纳时,语气中既带着钦佩,也流露出谨慎。“极度专注”、“极其聪明”、“莽撞”、“自信”是他们常用的形容词。不止一人形容他身上带有“神童气质”,正是硅谷历来乐于加冕的那类人物。也有人指出,他的思想并非特别新颖,只是包装巧妙、时机得当。而在批评者看来,他更像是一个制造热度的高手,而非洞察未来的思想家。但《财富》杂志采访的多位投资人却持不同意见,他们认为,阿申布伦纳的文章与早期投资布局展现出非凡的远见卓识。

然而,可以肯定的是,阿申布伦纳的崛起并非偶然,而是多重力量交汇的产物:全球资本正竞相涌入AI赛道;硅谷则痴迷于实现“通用人工智能”,即能与人类智慧相媲美、甚至超越人类的AI;与此同时,地缘政治格局也将AI的发展描绘成一场与中国之间的科技军备竞赛。

勾勒未来

在AI领域的某些小圈子里,利奥波德·阿申布伦纳早已小有名气。早在加入OpenAI之前,他就撰写过多篇在AI安全领域内部流传的博客、论文和研究文章。但对大多数人而言,他几乎是在一夜之间崭露头角。那是在2024年6月,他在网上自费发表了一篇长达165页的专著——《态势感知:未来十年》(Situational Awareness: The Decade Ahead)。这篇长文的标题借用了AI圈内早已熟知的一个术语——“态势感知”,它通常指AI模型开始意识到自身处境,这被视为一种安全风险。而阿申布伦纳却赋予了这一概念全新的含义:他主张政府与投资者必须正视AGI即将到来的速度,以及一旦美国落后所面临的巨大风险。

从某种意义上说,阿申布伦纳希望这份宣言能成为AI时代的“长电报”,就像当年美国外交官、苏联问题专家乔治·凯南在那封著名电报中所做的那样,唤醒美国精英阶层对欧洲面临苏联威胁的警觉。在这篇宣言的引言中,阿申布伦纳描绘了一个他声称只有少数几百名“有先见之明”的人才能看见的未来,“其中大多数人在旧金山及各大AI实验室”。毫不意外,他也将自己归入拥有“态势感知”的那类人;而世界其他地方的人们“对即将到来的冲击毫无察觉”。在大多数人眼中,AI要么只是炒作,要么充其量是又一次互联网级别的技术变革。而阿申布伦纳坚称自己看得更为清晰:大语言模型正以指数级速度进化,快速迈向AGI,并最终超越人类智慧,进入“超级智能”时代——这一进程将带来深远的地缘政治影响,同时也为那些先行者带来本世纪最大规模的经济红利。

为了强调这一点,阿申布伦纳援引了2020年初新冠疫情的例子——他认为,当时只有极少数人真正理解到疫情指数级传播的含义,意识到随之而来的经济冲击的规模,并在市场崩盘前通过做空获利。他写道:“我所能做的只是买口罩并做空市场。”同样地,他强调如今也只有极少数人真正明白AGI到来的速度,而那些先行者将有机会获得历史性收益。而他再次将自己列入有先见之明的少数人之一。

不过,《态势感知》的核心论点并非与新冠疫情的类比,而是数学本身揭示了未来的发展方向:扩展曲线显示,只要在相同的基础算法上不断增加数据量与算力,AI的能力就会呈指数级提升。

道格拉斯现任Anthropic强化学习扩展项目的技术负责人。他是阿申布伦纳的朋友兼前室友,曾多次与他讨论这篇专著的内容。他在接受《财富》杂志采访时表示,这篇文章将许多AI研究人员长期以来的感受凝聚为清晰的论述。道格拉斯表示:“如果我们相信这条趋势线会持续下去,那结局可能会相当疯狂。”与那些专注于每次连续模型发布渐进式改进的人不同,阿申布伦纳愿意“真正押注在指数级增长上”。

一篇走红的文章

关于AI风险与战略,每年都有大量篇幅冗长、内容晦涩的论文在圈子中流传,但大多数论文只是在少数小众论坛上引发短暂争论后便销声匿迹,比如由AI理论家、知名“末日论者”埃利泽·尤德科夫斯基创办的网站LessWrong,这里已成为理性主义与AI安全思想的聚集地。

但《态势感知》带来的冲击却截然不同。德克萨斯大学奥斯汀分校(UT Austin)计算机科学教授斯科特·亚伦森曾在OpenAI任职两年,期间与阿申布伦纳有过共事。他回忆起自己最初的反应是:“天哪,又来一篇这种文章。”但读完后,他对《财富》杂志表示:“我感觉这篇文章会让某位将军或国家安全官员读到后下令:‘这件事必须立刻采取行动。’”他随后在博客中写道,这篇文章是“我读过的最非凡的作品之一”。亚伦森指出,阿申布伦纳提出的论点是:“即便经历了ChatGPT及其之后的一切,世界仍远未真正意识到即将到来的冲击。”

一位长期从事AI治理研究的专家称这篇文章是“一项了不起的成果”,但同时强调其中的观点并不新鲜:“他基本上是把前沿AI实验室内部早已形成的共识,重新整理成一份包装精良、极具说服力且易于理解的作品。”其结果是,在全球AI讨论达到白热化的时刻,将这种原本只在业内流通的思维,推向更广泛的公众视野。

在AI安全研究员群体中,这篇文章引发了更为激烈的分歧。对这些主要关注AI可能对人类构成生存威胁的研究员而言,阿申布伦纳的作品更像是一种“背叛”,尤其是因为他本人正是出身于这一圈子。许多人认为,他们原本呼吁审慎与监管的论点,被他改造成了一份面向投资者的推销文案。一位前OpenAI治理研究员说道:“一些极度担忧[生存风险]的人现在非常反感利奥波德,因为他们觉得他出卖了理想。”也有人认同他的大部分预测,并认为他将这些预测传播给更广泛受众是有价值的。

但即便是批评者也不得不承认,阿申布伦纳在叙事包装与传播方面有非凡的天赋。另一位前OpenAI研究员表示:“他非常擅长把握时代脉搏,知道人们关心什么、什么内容容易引发热议。这就是他的超能力。他懂得如何通过塑造一种迎合当下情绪的叙事,来吸引有权势者的注意,比如美国必须击败中国、我们必须更加重视AI安全等。即便细节未必准确,时机却拿捏得恰到好处。”

正因把握住了时机,这篇文章几乎令人无法忽视。科技创始人和投资者们以处理热门投资意向书的紧迫感,争相转发《态势感知》;而政策制定者与国家安全官员则像传阅一份最刺激的NSA机密情报评估那样,在内部广泛流传。

正如一位现任OpenAI员工所言,阿申布伦纳的真正本领在于“他总能预判冰球将要滑向哪里。”

宏大叙事与资本运作的结合

就在文章发布的同时,阿申布伦纳创立了以“态势感知”(Situational Awareness LP)为名的对冲基金。这家围绕AGI主题构建的对冲基金,主要投资上市公司而非私营初创企业。

该基金的初始资金来自多位硅谷重量级人物,包括投资人、现任Meta AI产品负责人纳特·弗里德曼。据报道,阿申布伦纳在2023年弗里德曼读过他的一篇博客后与他取得联系。此外还有弗里德曼的投资合伙人丹尼尔·格罗斯以及Stripe联合创始人帕特里克·科里森和约翰·科里森。据称帕特里克·科里森早在2021年由一位关系人安排的私人晚宴上与阿申布伦纳相识,晚宴的目的是让他们交流共同感兴趣的话题。阿申布伦纳还邀请45岁的AI预测与治理研究员卡尔·舒尔曼担任基金研究主管。舒尔曼在AI安全领域人脉深厚,曾在彼得·泰尔旗下的Clarium Capital工作。

在配合基金发布的一档时长四小时的播客中(对谈者为德瓦克什·帕特尔),阿申布伦纳强调了他所预测的AGI到来后的爆炸式增长。他表示“之后的十年同样会极其疯狂”,届时“资本将变得至关重要”。他表示,如果运作得当,“将有丰厚的回报。如果市场从明天起完全消化AGI的预期,你也许能获得百倍回报。”

这份宣言与基金相辅相成:一边是一部篇幅堪比专著的投资论纲,另一边则是一位信念坚定、愿意以真金白银下注的预言家。事实证明,这样的组合对某类投资者而言几乎具有无法抗拒的吸引力。一位前OpenAI研究员指出,弗里德曼素以“把握时代脉搏”著称,他善于支持那些能精准捕捉当下氛围、并将其转化为影响力的人。而支持阿申布伦纳,正完美契合了这一投资逻辑。

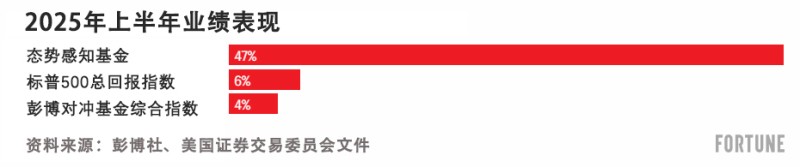

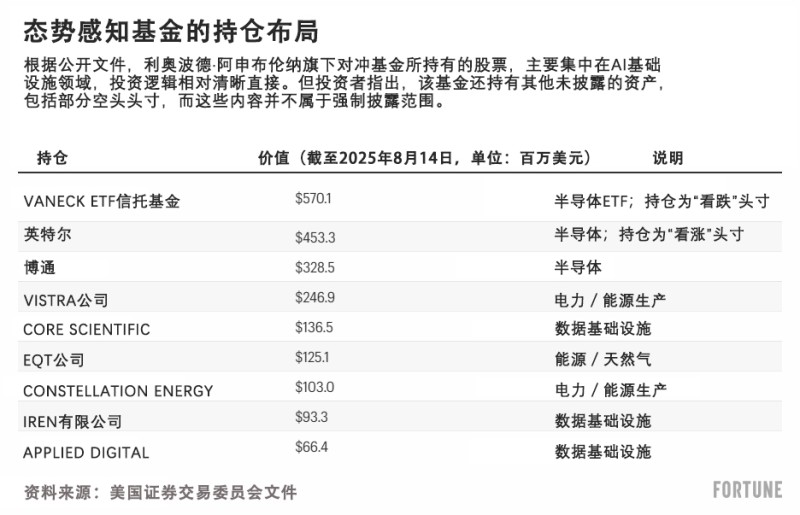

态势感知基金的投资策略相当直接:押注那些有可能从AI浪潮中受益的全球股票,涵盖半导体、基础设施与电力公司,同时做空可能在AI时代落后的行业来对冲风险。公开文件揭示了该基金的部分持仓情况:今年6月提交给美国证券交易委员会(SEC)的文件显示,该基金持有英特尔(Intel)、博通(Broadcom)、Vistra以及前比特币矿企Core Scientific等美国公司的股份。值得注意的是,CoreWeave已于7月宣布将收购Core Scientific。上述企业都被视为AI基础设施建设的主要受益方。到目前为止,这一投资布局已带来回报。该基金的资产规模迅速扩大至超过15亿美元,并在今年上半年实现了47%的净收益(扣除费用后)。

态势感知基金的发言人表示,投资者来自全球各地,包括美国西海岸的创业者、家族理财办公室、机构投资者及捐赠基金等。发言人同时透露,阿申布伦纳“几乎将自己的全部净资产都投入了这只基金”。

当然,任何关于美国对冲基金持仓情况的公开信息都并不完整。公开披露的13F文件仅涵盖在美国上市股票中的多头头寸,而空头、衍生品及海外投资等均无需披露,这也给该基金的真实投资动向增添了一层神秘色彩。尽管如此,一些观察人士质疑,阿申布伦纳的早期业绩究竟源于投资能力,还是纯属时机巧合。例如,该基金在第一季度的申报文件中披露持有约4.59亿美元的英特尔看涨期权。在随后几个月,英特尔先是获得联邦投资,随后英伟达(Nvidia)又入股50亿美元,股价大幅上涨,这笔持仓显得“颇有先见之明”。

但至少部分经验丰富的金融界人士对他的看法已经有所不同。资深对冲基金投资者格雷厄姆·邓肯以个人身份投资了态势感知基金,现任该基金顾问。邓肯表示,阿申布伦纳兼具业内洞察与大胆投资策略的组合给他留下了深刻印象。他说:“我觉得他的文章非常有启发性。”他补充道,阿申布伦纳与舒尔曼并非那种在外围寻找机会的局外人,而是围绕自身观点亲手搭建投资工具的圈内人。这家基金的投资逻辑让他想起那些在次贷危机爆发前就察觉到风险的少数“逆势投资者”,如因为被迈克尔·刘易斯写进《大空头》(The Big Short)而名声大噪的迈克尔·伯里。邓肯表示:“如果你想拥有差异性认知,保持一点与众不同的心态会有所帮助。”

邓肯举了一个例子:今年1月,中国初创公司深度求索(DeepSeek)发布开源大语言模型R1,尽管中国仍面临资金限制和出口管制等方面的制约,但这一事件被许多人称作中国AI崛起的“斯普特尼克时刻”。邓肯表示,当多数投资者因这则消息陷入恐慌时,阿申布伦纳与舒尔曼早就开始密切关注这一动态,并认为市场抛售是过度反应。两人选择逆势买入,而非跟风卖出。有报道称,当时甚至有一家大型科技基金在分析师一句“利奥波德说没问题”的建议后,放弃了清仓决定。邓肯表示,那一刻奠定了阿申布伦纳在业内的信誉,尽管他也坦言:“他仍有可能被证明是错误的。”

态势感知基金的另一位投资者本人管理着一家领先的对冲基金。他对《财富》杂志表示,当他问阿申布伦纳为何创立专注于AI的对冲基金而非风险投资基金(这似乎是最显而易见的选择)时,阿申布伦纳的回答让他印象深刻。

这位投资者表示:“他说,AGI将对全球经济产生深远影响,而充分利用它的唯一方式就是在全球流动性最强的市场中表达投资观点。他们学习曲线的攀升速度之快让我感到震惊……在公开市场中,他们对AI投资的理解远比我接触过的任何团队都要成熟。”

从哥伦比亚大学“神童”到FTX与OpenAI

阿申布伦纳出生于德国,父母均为医生。他15岁进入哥伦比亚大学就读,19岁以最佳毕业生的身份毕业。一位长期从事AI治理研究、并自称与阿申布伦纳相识的研究员回忆说,她在阿申布伦纳读本科时第一次听说过他的名字。

她表示:“我当时听人提起他说:‘哦,我们听说过这个叫利奥波德·阿申布伦纳的年轻人,他好像很聪明。’感觉他就是一位神童。”

这种“天才少年”的声誉此后愈发巩固。17岁时,阿申布伦纳获得经济学家泰勒·科文创办的新兴风险基金(Emergent Ventures)资助,科文称他为“经济学奇才”。在哥伦比亚大学就读期间,他还在全球优先研究院(Global Priorities Institute)实习,与经济学家菲利普·特拉梅尔合著论文,并为Stripe资助的出版物《Works in Progress》撰写文章,这使他在科技与知识界进一步建立了立足点。

那时的阿申布伦纳,已深度融入“有效利他主义”(Effective Altruism)社群。这是一个以哲学为导向、在AI安全领域颇具影响力但也颇具争议的运动。他还共同创立了哥伦比亚大学的有效利他主义分会。正是这一人脉网络,最终让他进入了FTX未来基金(FTX Future Fund)。该慈善机构由加密货币交易所创始人萨姆·班克曼-弗里德创立。班克曼-弗里德同样是有效利他主义的拥护者,他曾向包括AI治理研究在内的多个与有效利他主义慈善目标一致的领域捐赠数亿美元。

FTX未来基金最初旨在支持与“有效利他主义”理念一致的慈善项目,但后来该基金被揭露其资金来源于班克曼-弗里德的FTX加密货币交易所,这些资金实质上是挪用的用户账户资金。(目前没有证据证明在FTX未来基金工作的任何人知晓资金被盗用,或参与任何违法行为。)

在FTX未来基金期间,阿申布伦纳曾与一个小团队共事,其中包括有效利他主义运动的发起人之一威廉·麦克阿斯基尔以及阿维塔尔·巴尔维特。后者现任Anthropic首席执行官达里奥·阿莫代伊的幕僚长。据态势感知基金的发言人透露,巴尔维特目前已与阿申布伦纳订婚。巴尔维特在2024年6月发表的一篇文章中写道,“未来五年可能是我工作的最后几年”,因为AGI可能“终结我所理解的就业形态”。这一观点恰好与阿申布伦纳坚信的另一面形成鲜明对照——他认为,同样的技术将让他的投资者实现巨额财富增长。

然而,随着班克曼-弗里德的FTX帝国于2022年11月轰然崩塌,FTX未来基金的慈善项目也随之土崩瓦解。阿申布伦纳在接受德瓦克什·帕特尔采访时表示:“我们是一个很小的团队,但从某一天起,一切都不复存在,还被卷入了一场巨大的欺诈案。那段经历极其艰难。”

然而,在FTX倒闭仅仅几个月后,阿申布伦纳再度回到公众视野——这一次是在OpenAI。2023年,他加入了该公司新成立的“超级对齐”团队,致力于研究一个迄今无人真正解决的问题:如何引导并控制未来那些智能水平远超人类、甚至可能超过全人类智慧总和的AI系统。现有方法如“基于人类反馈的强化学习”(RLHF)在当前模型中虽取得一定成效,但其前提是人类能够理解并评估AI的输出,而一旦系统的智能超越人类理解范围,这一前提将不复存在。

德克萨斯大学计算机科学教授亚伦森早于阿申布伦纳加入OpenAI。他表示,阿申布伦纳最令他印象深刻的是那种“立即行动”的天赋。亚伦森当时正致力于为ChatGPT输出内容添加“水印”,以便更容易识别AI生成文本。他表示:“我已经提出了一个方案,但这个想法一直悬而未决。而利奥波德立刻表示:‘是的,我们必须做这件事,我来负责推进。’”

不过,也有人对他的印象截然不同,认为他在政治敏感度上显得笨拙,有时显得傲慢。一位现任OpenAI研究员回忆道:“他从来不怕在会议上说话尖锐,或者惹恼上级,这令我震惊。”另一位前OpenAI员工表示,自己第一次注意到阿申布伦纳,是在一次公司全员会议上听他发表演讲。那场演讲的主题,后来成为《态势感知》中的核心观点。他形容阿申布伦纳“有点难以相处”。多位研究人员还提到一场假日派对。当时在闲聊的过程中,阿申布伦纳竟直接向时任Scale AI首席执行官汪滔透露了OpenAI所拥有的GPU数量。其中一人表示,他“就那样直接公开说了出来”。两名消息人士告诉《财富》,他们亲耳听到了这番话。他们解释称,许多人对阿申布伦纳如此随意地谈论极为敏感的信息感到震惊。但汪滔和阿申布伦纳均通过发言人否认曾进行过这样的交流。

阿申布伦纳的代表对《财富》杂志表示:“这一说法完全不属实。利奥波德从未与汪滔讨论过任何内部信息。利奥波德经常讨论AI的扩展趋势,例如在《态势感知》中所阐述的内容,这些讨论均基于公开资料和行业趋势。”

2024年4月,OpenAI解雇了阿申布伦纳,给出的官方理由是其“泄露内部信息”(这与他被曝向汪滔透露GPU数量的传闻无关)。两个月后,他在德瓦克什的播客节目中回应称,所谓的“泄密”其实是一份头脑风暴文件,内容涉及“未来实现AGI过程中所需的准备、安全与防护措施”。他将这份文件分享给了三位外部研究人员征求意见。在当时的OpenAI,这种做法“完全正常”。他还表示,真正导致他被解雇的原因,是他此前撰写的一份内部备忘录。在那份备忘录中,他直言OpenAI的安全体系“严重不足”。

据媒体报道,OpenAI通过发言人回应称,阿申布伦纳在公司内部(包括向董事会)提出的安全担忧“并非导致其离职的原因”。该发言人还表示,公司“不认同他后来关于OpenAI安全问题及其离职情况的诸多说法”。

无论如何,在阿申布伦纳被解雇之际,OpenAI正经历更广泛的动荡:在数周内,由OpenAI联合创始人兼首席科学家伊利亚·苏茨克弗和AI研究员扬·莱克领导、阿申布伦纳曾任职的“超级对齐”团队,因两位负责人先后离职而宣告解散。

两个月后,阿申布伦纳发表了《态势感知》,并推出了他的对冲基金。如此高效的行动,让部分前同事猜测,他可能早在OpenAI任职期间就已开始为此布局。

回报与言论的较量

即便是持怀疑态度的人也承认,阿申布伦纳成功抓住了当下围绕AGI的投资热潮,确实得到了市场回报,然而质疑声依然存在。一位如今创业的前OpenAI同事表示:“我实在想不出,谁会信任一个毫无基金管理经验又如此年轻的人。除非我确信这只基金有非常严格的治理机制,否则我绝不会成为由一个年轻人操盘的基金的有限合伙人。”

也有人质疑他以“AI恐惧”获利的伦理问题。一位前OpenAI研究员表示:“许多人虽然认同利奥波德的论点,但并不赞成他通过渲染中美竞争或借助AGI热潮来筹资,即使这种热潮有其合理性。”另一位研究员则直言:“要么他现在已经不再认为(AI带来的生存风险)是什么大问题,要么他多少有些不够真诚。”

一位“有效利他主义”社群的前策略师表示,该领域的许多人“对他感到不满”,尤其反感他宣扬存在一场“AGI竞赛”,因为这种说法“最终会变成自我实现的预言”。尽管通过煽动“军备竞赛”概念获利可以被合理化,毕竟有效利他主义者将为了未来捐赠而赚钱视为一种美德,但这位前策略师认为“以利奥波德基金的体量来看,他已经在切实地提供资本”,而这本身便具有更沉重的道德分量。

亚伦森指出,更深层的担忧在于:阿申布伦纳所传递的信息——即美国必须不惜一切代价加速发展AI以赢得科技竞赛——恰恰在华盛顿找到了受众。而此时,像马克·安德森、大卫·萨克斯和迈克尔·克拉齐奥斯等“加速主义”代表人物的声音正日益高涨。亚伦森表示:“即便利奥波德本人未必这样认为,他的文章也会被那些持此观点的人所利用。”如果真是如此,那么他留下的最大遗产或许并非一家对冲基金,而是一个纳入全球科技竞赛中的更宏大的思想框架。

倘若这一判断成真,阿申布伦纳的真正影响力将不在于投资回报,而在于话语塑造——他的思想如何从硅谷蔓延至华盛顿,进而影响政策讨论。这也凸显出他故事的核心悖论:在一些人眼中,他是看清时代走向的天才;而在另一些人看来,他则是一个善于操弄叙事、将AI安全焦虑包装成投资推介的“权谋人物”。无论哪种说法正确,如今已有数十亿美元取决于他对AGI的赌局能否成功。(*)

译者:刘进龙

审校:汪皓

Of all the unlikely stories to emerge from the current AI frenzy, few are more striking than that of Leopold Aschenbrenner.

The 23-year-old’s career didn’t exactly start auspiciously: He spent time at the philanthropy arm of Sam Bankman-Fried’s now-bankrupt FTX cryptocurrency exchange before a controversial year at OpenAI, where he was ultimately fired. Then, just two months after being booted out of the most influential company in AI, he penned an AI manifesto that went viral—President Trump’s daughter Ivanka even praised it on social media—and used it as a launching pad for a hedge fund that now manages more than $1.5 billion. That’s modest by hedge-fund standards but remarkable for someone barely out of college. Just four years after graduating from Columbia, Aschenbrenner is holding private discussions with tech CEOs, investors, and policymakers who treat him as a kind of prophet of the AI age.

It’s an astonishing ascent, one that has many asking not just how this German-born early-career AI researcher pulled it off, but whether the hype surrounding him matches the reality. To some, Aschenbrenner is a rare genius who saw the moment—the coming of humanlike artificial general intelligence, China’s accelerating AI race, and the vast fortunes awaiting those who move first—more clearly than anyone else. To others, including several former OpenAI colleagues, he’s a lucky novice with no finance track record, repackaging hype into a hedge fund pitch.

His meteoric rise captures how Silicon Valley converts zeitgeist into capital, and how that, in turn, can be parlayed into influence. While critics question whether launching a hedge fund was simply a way to turn dubious techno-prophecy into profit, friends like Anthropic researcher Sholto Douglas frame it differently, as a “theory of change.” Aschenbrenner is using the hedge fund to garner a credible voice in the financial ecosystem, Douglas explained: “He is saying, ‘I have an extremely high conviction [that this is] how the world is going to evolve, and I am literally putting my money where my mouth is.’”

But that also prompts the question: Why are so many willing to trust this newcomer?

The answer is complicated. In conversations with over a dozen friends, former colleagues, and acquaintances of Aschenbrenner, as well as investors and Silicon Valley insiders, one theme keeps surfacing: Aschenbrenner has been able to seize ideas that have been gathering momentum across Silicon Valley’s labs and use them as ingredients for a coherent and convincing narrative that is like a blue plate special to investors with a healthy appetite for risk.

Aschenbrenner declined to comment for this story. A number of sources were granted anonymity owing to concerns over the potential consequences of speaking about people who wield considerable power and influence in AI circles.

Many spoke of Aschenbrenner with a mixture of admiration and wariness—“intense,” “scarily smart,” “brash,” “confident.” More than one described him as carrying the aura of a wunderkind, the kind of figure Silicon Valley has long been eager to anoint. Others, however, noted that his thinking wasn’t especially novel, just unusually well-packaged and well-timed. Yet, while critics dismiss him as more hype than insight, investors Fortune spoke with see him differently, crediting his essays and early portfolio bets with unusual foresight.

There is no doubt, however, that Aschenbrenner’s rise reflects a unique convergence: vast pools of global capital eager to ride the AI wave; a Valley enthralled by the prospect of achieving artificial general intelligence (AGI), or AI that matches or surpasses human intelligence; and a geopolitical backdrop that frames AI development as a technological arms race with China.

Sketching the future

Within certain corners of the AI world, Leopold Aschenbrenner was already familiar as someone who had written blog posts, essays, and research papers that circulated among AI safety circles, even before joining OpenAI. But for most people, he appeared seemingly overnight in June 2024. That’s when he self-published online a 165-page monograph called Situational Awareness: The Decade Ahead. The long essay borrowed for its title a phrase already familiar in AI circles, where “situational awareness” usually refers to models becoming aware of their own circumstances—a safety risk. But Aschenbrenner used it to mean something else entirely: the need for governments and investors to recognize how quickly AGI might arrive, and what was at stake if the U.S. fell behind.

In a sense, Aschenbrenner intended his manifesto to be the AI era’s equivalent of George Kennan’s “Long Telegram,” in which the American diplomat and Russia expert sought to awaken elite opinion in the U.S. to what he saw as the looming Soviet threat to Europe. In the introduction, Aschenbrenner sketched a future he claimed was visible only to a few hundred prescient people, “most of them in San Francisco and the AI labs.” Not surprisingly, he included himself among those with “situational awareness,” while the rest of the world had “not the faintest glimmer of what is about to hit them.” To most, AI looked like hype or, at best, another internet-scale shift. What he insisted he could see more clearly was that LLMs were improving at an exponential rate, scaling rapidly toward AGI, and then beyond to “superintelligence”—with geopolitical consequences and, for those who moved early, the chance to capture the biggest economic windfall of the century.

To drive the point home, he invoked the example of COVID in early 2020—arguing that only a few grasped the implications of a pandemic’s exponential spread, understood the scope of the coming economic shock, and profited by shorting before the crash. “All I could do is buy masks and short the market,” he wrote. Similarly, he emphasized that only a small circle today comprehends how quickly AGI is coming, and those who act early stand to capture historic gains. And once again, he cast himself among the prescient few.

But the core of Situational Awareness’s argument wasn’t the COVID parallel. It was the argument that the math itself—the scaling curves that suggested AI capabilities increased exponentially with the amount of data and compute thrown at the same basic algorithms—showed where things were headed.

Douglas, now a tech lead on scaling reinforcement learning at Anthropic, is both a friend and former roommate of Aschenbrenner’s who had conversations with him about the monograph. He told Fortune that the essay crystallized what many AI researchers had felt. “If we believe that the trend line will continue, then we end up in some pretty wild places,” Douglas said. Unlike many who focused on the incremental progress of each successive model release, Aschenbrenner was willing to “really bet on the exponential,” he said.

An essay goes viral

Plenty of long, dense essays about AI risk and strategy circulate every year, most vanishing after brief debates in niche forums like LessWrong, a website founded by AI theorist and “doomer” extraordinaire Eliezer Yudkowsky that became a hub for rationalist and AI-safety ideas.

But Situational Awareness hit differently. Scott Aaronson, a computer science professor at UT Austin who spent two years at OpenAI overlapping with Aschenbrenner, remembered his initial reaction: “Oh man, another one.” But after reading, he told Fortune: “I had the sense that this is actually the document some general or national security person is going to read and say: ‘This requires action.’” In a blog post, he called the essay “one of the most extraordinary documents I’ve ever read,” saying Aschenbrenner “makes a case that, even after ChatGPT and all that followed it, the world still hasn’t come close to ‘pricing in’ what’s about to hit it.”

A longtime AI governance expert described the essays as “a big achievement,” but emphasized that the ideas were not new: “He basically took what was already common wisdom inside frontier AI labs and wrote it up in a very nicely packaged, compelling, easy-to-consume way.” The result was to make insider thinking legible to a much broader audience at a fever-pitch moment in the AI conversation.

Among AI safety researchers, who worry primarily about the ways in which AI might pose an existential risk to humanity, the essays were more divisive. For many, Aschenbrenner’s work felt like a betrayal, particularly because he had come out of those very circles. They felt their arguments urging caution and regulation had been repurposed into a sales pitch to investors. “Some people who are very worried about [existential risks] quite dislike Leopold now because of what he’s done—they basically think he sold out,” said one former OpenAI governance researcher. Others agreed with most of his predictions and saw value in amplifying them.

Still, even critics conceded his knack for packaging and marketing. “He’s very good at understanding the zeitgeist—what people are interested in and what could go viral,” said another former OpenAI researcher. “That’s his superpower. He knew how to capture the attention of powerful people by articulating a narrative very favorable to the mood of the moment: that the U.S. needed to beat China, that we needed to take AI security more seriously. Even if the details were wrong, the timing was perfect.”

That timing made the essays unavoidable. Tech founders and investors shared Situational Awareness with the sort of urgency usually reserved for hot term sheets, while policymakers and national security officials circulated it like the juiciest classified NSA assessment.

As one current OpenAI staffer put it, Aschenbrenner’s skill is “knowing where the puck is skating.”

A sweeping narrative paired with an investment vehicle

At the same time as the essays were released, Aschenbrenner launched Situational Awareness LP, a hedge fund built around the theme of AGI, with its bets placed in publicly traded companies rather than private startups.

The fund was seeded by Silicon Valley heavyweights like investor and current Meta AI product lead Nat Friedman—Aschenbrenner reportedly connected with him after Friedman read one of his blog posts in 2023—as well as Friedman’s investing partner Daniel Gross, and Patrick and John Collison, Stripe’s cofounders. Patrick Collison reportedly met Aschenbrenner at a 2021 dinner set up by a connection “to discuss their shared interests.” Aschenbrenner also brought on Carl Shulman—a 45-year-old AI forecaster and governance researcher with deep ties in the AI safety field and a past stint at Peter Thiel’s Clarium Capital—to be the new hedge fund’s director of research.

In a four-hour podcast with Dwarkesh Patel tied to the launch, Aschenbrenner touted the explosive growth he expects once AGI arrives, saying, “The decade after is also going to be wild,” in which “capital will really matter.” If done right, he said, “there’s a lot of money to be made. If AGI were priced in tomorrow, you could maybe make 100x.”

Together, the manifesto and the fund reinforced each other: Here was a book-length investment thesis paired with a prognosticator with so much conviction he was willing to put serious money on the line. It proved an irresistible combination to a certain kind of investor. One former OpenAI researcher said Friedman is known for “zeitgeist hacking”—backing people who could capture the mood of the moment and amplify it into influence. Supporting Aschenbrenner fit that playbook perfectly.

Situational Awareness’s strategy is straightforward: It bets on global stocks likely to benefit from AI—semiconductors, infrastructure, and power companies—offset by shorts on industries that could lag behind. Public filings reveal part of the portfolio: A June SEC filing showed stakes in U.S. companies including Intel, Broadcom, Vistra, and former Bitcoin-miner Core Scientific (which CoreWeave announced it would acquire in July), all seen as beneficiaries of the AI build-out. So far, it has paid off: The fund quickly swelled to over $1.5 billion in assets and delivered 47% gains, after fees, in the first half of this year.

According to a spokesperson, Situational Awareness LP has global investors, including West Coast founders, family offices, institutions, and endowments. In addition, the spokesperson said, Aschenbrenner “has almost all of his net worth invested in the fund.”

To be sure, any picture of a U.S. hedge fund’s holdings is incomplete. The publicly available 13F filings only cover long positions in U.S.-listed stocks—shorts, derivatives, and international investments aren’t disclosed—adding an inevitable layer of mystery around what the fund is really betting on. Still, some observers have questioned whether Aschenbrenner’s early results reflect skill or fortunate timing. For example, his fund disclosed roughly $459 million in Intel call options in its first-quarter filing—positions that later looked prescient when Intel’s shares climbed over the summer following a federal investment and a subsequent $5 billion stake from Nvidia.

But at least some experienced financial industry professionals have come to view him differently. Veteran hedge fund investor Graham Duncan, who invested personally in Situational Awareness LP and now serves as an advisor to the fund, said he was struck by Aschenbrenner’s combination of insider perspective and bold investment strategy. “I found his paper provocative,” Duncan said, adding that Aschenbrenner and Shulman weren’t outsiders scanning opportunities but insiders building an investment vehicle around their view. The fund’s thesis reminded him of the few contrarians who spotted the subprime collapse before it hit—people like Michael Burry, whom Michael Lewis made famous in his book The Big Short. “If you want to have variant perception, it helps to be a little variant.”

He pointed to Situational Awareness’s reaction to Chinese startup DeepSeek’s January release of its R1 open-source LLM, which many dubbed a “Sputnik moment” that showcased China’s rising AI capabilities despite limited funding and export controls. While most investors panicked, he said, Aschenbrenner and Shulman had already been tracking DeepSeek and saw the selloff as an overreaction. They bought rather than sold, and even a major tech fund reportedly held back from dumping shares after an analyst said, “Leopold says it’s fine.” That moment, Duncan said, cemented Aschenbrenner’s credibility, though Duncan acknowledged, “He could yet be proven wrong.”

Another investor in Situational Awareness LP, who manages a leading hedge fund, told Fortune that he was struck by Aschenbrenner’s answer when asked why he was starting a hedge fund focused on AI rather than a VC fund, which seemed like the most obvious choice.

“He said that AGI was going to be so impactful to the global economy that the only way to fully capitalize on it was to express investment ideas in the most liquid markets in the world,” he said. “I am a bit stunned by how fast they have come up the learning curve … They are way more sophisticated on AI investing than anyone else I speak to in the public markets.”

A Columbia ‘whiz kid’ who went on to FTX and OpenAI

Aschenbrenner, born in Germany to two doctors, enrolled at Columbia when he was just 15 and graduated valedictorian at 19. The longtime AI governance researcher, who described herself as an acquaintance of Aschenbrenner’s, recalled that she first heard of him when he was still an undergraduate.

“I heard about him as, ‘Oh, we heard about this Leopold Aschenbrenner kid, he seems like a sharp guy,’” she said. “The vibe was very much a whiz kid sort of thing.”

That wunderkind reputation only deepened. At 17, Aschenbrenner won a grant from economist Tyler Cowen’s Emergent Ventures, and Cowen called him an “economics prodigy.” While still at Columbia, Aschenbrenner also interned at the Global Priorities Institute, coauthoring a paper with economist Philip Trammell, and contributed essays to Works in Progress, a Stripe-funded publication that gave him another foothold in the tech-intellectual world.

He was already embedded in the Effective Altruism community—a controversial philosophy-driven movement influential in AI safety circles—and cofounded Columbia’s EA chapter. That network eventually led him to a job at the FTX Future Fund, a charity founded by cryptocurrency exchange founder Sam Bankman-Fried. Bankman-Fried was another EA adherent who donated hundreds of millions of dollars to causes, including AI governance research, that aligned with EA’s philanthropic priorities.

The FTX Future Fund was designed to support EA-aligned philanthropic priorities, although it was later found to have used money from Bankman-Fried’s FTX cryptocurrency exchange that was essentially looted from account holders. (There is no evidence that anyone who worked at the FTX Future Fund knew the money was stolen or did anything illegal.)

At the FTX Future Fund, Aschenbrenner worked with a small team that included William MacAskill, a cofounder of Effective Altruism, and Avital Balwit—now chief of staff to Anthropic CEO Dario Amodei and, according to a Situational Awareness LP spokesperson, currently engaged to Aschenbrenner. Balwit wrote in a June 2024 essay that “these next five years might be the last few years that I work,” because AGI might “end employment as I know it”—a striking mirror image of Aschenbrenner’s conviction that the same technology will make his investors rich.

But when Bankman-Fried’s FTX empire collapsed in November 2022, the Future Fund philanthropic effort imploded. “We were a tiny team, and then from one day to the next, it was all gone and associated with a giant fraud,” Aschenbrenner told Dwarkesh Patel. “That was incredibly tough.”

Just months after FTX collapsed, however, Aschenbrenner reemerged—at OpenAI. He joined the company’s newly launched “superalignment” team in 2023, created to tackle a problem no one yet knows how to solve: how to steer and control future AI systems that would be far smarter than any human being, and perhaps smarter than all of humanity put together. Existing methods like reinforcement learning from human feedback (RLHF) had proven somewhat effective for today’s models, but they depend on humans being able to evaluate outputs—something which might not be possible if systems surpassed human comprehension.

Aaronson, the UT computer science professor, joined OpenAI before Aschenbrenner and said what impressed him was Aschenbrenner’s instinct to act. Aaronson had been working on watermarking ChatGPT outputs to make AI-generated text easier to identify. “I had a proposal for how to do that, but the idea was just sort of languishing,” he said. “Leopold immediately started saying, ‘Yes, we should be doing this, I’m going to take responsibility for pushing it.’”

Others remembered him differently, as politically clumsy and sometimes arrogant. “He was never afraid to be astringent at meetings or piss off the higher-ups, to a degree I found alarming,” said one current OpenAI researcher. A former OpenAI staffer, who said they first became aware of Aschenbrenner when he gave a talk at a company all-hands meeting that previewed themes he would later publish in Situational Awareness, recalled him as “a bit abrasive.” Multiple researchers also described a holiday party where, in a casual group discussion, Aschenbrenner told then Scale AI CEO Alexandr Wang how many GPUs OpenAI had—“just straight out in the open,” as one put it. Two people told Fortune they had directly overheard the remark. A number of people were taken aback, they explained, at how casually Aschenbrenner shared something so sensitive. Through spokespeople, both Wang and Aschenbrenner denied that the exchange occurred.

“This account is entirely false,” a representative of Aschenbrenner told Fortune. “Leopold never discussed private information with Alex. Leopold often discusses AI scaling trends such as in Situational Awareness, based on public information and industry trends.”

In April 2024, OpenAI fired Aschenbrenner, officially citing the leaking of internal information (the incident was not related to the alleged GPU remarks to Wang). On the Dwarkesh podcast two months later, Aschenbrenner maintained the “leak” was “a brainstorming document on preparedness, safety, and security measures needed in the future on the path to AGI” that he shared with three external researchers for feedback—something he said was “totally normal” at OpenAI at the time. He argued that an earlier memo in which he said OpenAI’s security was “egregiously insufficient to protect against the theft of model weights or key algorithmic secrets from foreign actors” was the real reason for his dismissal.

According to news reports, an OpenAI spokesperson responded that the security concerns Aschenbrenner raised internally (including to the board) “did not lead to his separation.” The spokesperson also said they “disagree with many of the claims he has since made” about OpenAI’s security and the circumstances of his departure.

Either way, Aschenbrenner’s ouster came amid broader turmoil: Within weeks, OpenAI’s “superalignment” team—led by OpenAI’s cofounder and chief scientist Ilya Sutskever and AI researcher Jan Leike, and where Aschenbrenner had worked—dissolved after both leaders departed from the company.

Two months later, Aschenbrenner published Situational Awareness and unveiled his hedge fund. The speed of the rollout prompted speculation among some former colleagues that he had been laying the groundwork while still at OpenAI.

Returns vs. rhetoric

Even skeptics acknowledge the market has rewarded Aschenbrenner for channeling today’s AGI hype, but still, doubts linger. “I can’t think of anybody that would trust somebody that young with no prior fund management [experience],” said a former OpenAI colleague who is now a founder. “I would not be an LP in a fund drawn by a child unless I felt there was really strong governance in place.”

Others question the ethics of profiting from AI fears. “Many agree with Leopold’s arguments, but disapprove of stoking the U.S.-China race or raising money based off AGI hype, even if the hype is justified,” said one former OpenAI researcher. “Either he no longer thinks that [the existential risk from AI] is a big deal or he is arguably being disingenuous,” said another.

One former strategist within the Effective Altruism community said many in that world “are annoyed with him,” particularly for promoting the narrative that there’s a “race to AGI” that “becomes a self-fulfilling prophecy.” While profiting from stoking the idea of an arms race can be rationalized—since Effective Altruists often view making money for the purpose of then giving it away as virtuous—the former strategist argued that “at the level of Leopold’s fund, you’re meaningfully providing capital,” and that carries more moral weight.

The deeper worry, said Aaronson, is that Aschenbrenner’s message—that the U.S. must accelerate the pace of AI development at all costs in order to beat China—has landed in Washington at a moment when accelerationist voices like Marc Andreessen, David Sacks, and Michael Kratsios are ascendant. “Even if Leopold doesn’t believe that, his essay will be used by people who do,” Aaronson said. If so, his biggest legacy may not be a hedge fund, but a broader intellectual framework that is helping to cement a technological Cold War between the U.S. and China.

If that proves true, Aschenbrenner’s real impact may be less about returns and more about rhetoric—the way his ideas have rippled from Silicon Valley into Washington. It underscores the paradox at the center of his story: To some, he’s a genius who saw the moment more clearly than anyone else. To others, he’s a Machiavellian figure who repackaged insider safety worries into an investor pitch. Either way, billions are now riding on whether his bet on AGI delivers.